Test your AI voice agents against real attacks

A single misconfigured voice agent can leak sensitive data, misuse internal tools, or make commitments your business never approved – often without anyone noticing. We uncover where your agents are already vulnerable, before it becomes expensive.

What your AI voice agents put at risk

AI voice agents are built on LLMs that work probabilistically: they sound confident, but they are not deterministic. The same request can lead to very different answers, hallucinations, or unexpected actions depending on context.

That is why production voice setups need a second line of defense: technical guardrails, automated testing, and monitoring. With the EU AI Act, the pressure is growing to monitor AI systems demonstrably and to reduce risk continuously.

When the agent talks directly to customers

A voice agent answers questions about contracts or invoices – and promises goodwill gestures, discounts, or deferrals that your policies do not cover. Because the same wrong commitment can be repeated on every call, individual errors scale quickly.

When the agent is wired into internal systems

Your voice agent can create tickets, modify customer data, or trigger payments. A jailbreak or clever prompt variation can be enough to trigger unintended actions or expose sensitive information.

When monitoring and governance are missing

Without structured tests and reporting, you have no clear view of what your agent actually says today. Internal risk teams – and regulators in the context of the EU AI Act – lack the basis to assess risk and quality in a transparent way.

How exposed AI voice systems already are

Behind every polished demo there is a messy reality: automated attacks, misconfigurations, and incidents that never make it into a public report. The question is not whether someone will try to break your agents, but whether you will notice when they do.

estimated automated probing attempts per day across publicly exposed AI interfaces (email, chat, voice, web)

of companies report at least one security or compliance incident linked to AI or automation in the last 12 months

of incidents are actually reported – many are discovered late or not at all. Your agents might already have been abused without anyone noticing.

How centerbit builds AI agent security with you

From the first test run to continuous monitoring: we turn security into a repeatable process instead of a one-off project.

Initial test run: We run a targeted attack campaign against your existing voice agents, using realistic attack patterns without disrupting your production traffic.

Structured report: You receive a structured report with risk scores, reproducible examples, and a clear prioritization of findings.

Joint review session: In a joint workshop we walk through the results, translate them into business and technical impact, and derive concrete decisions for your team.

System hardening: Based on the report, you adapt prompts, policies, and integrations – optionally together with us – and close the identified gaps.

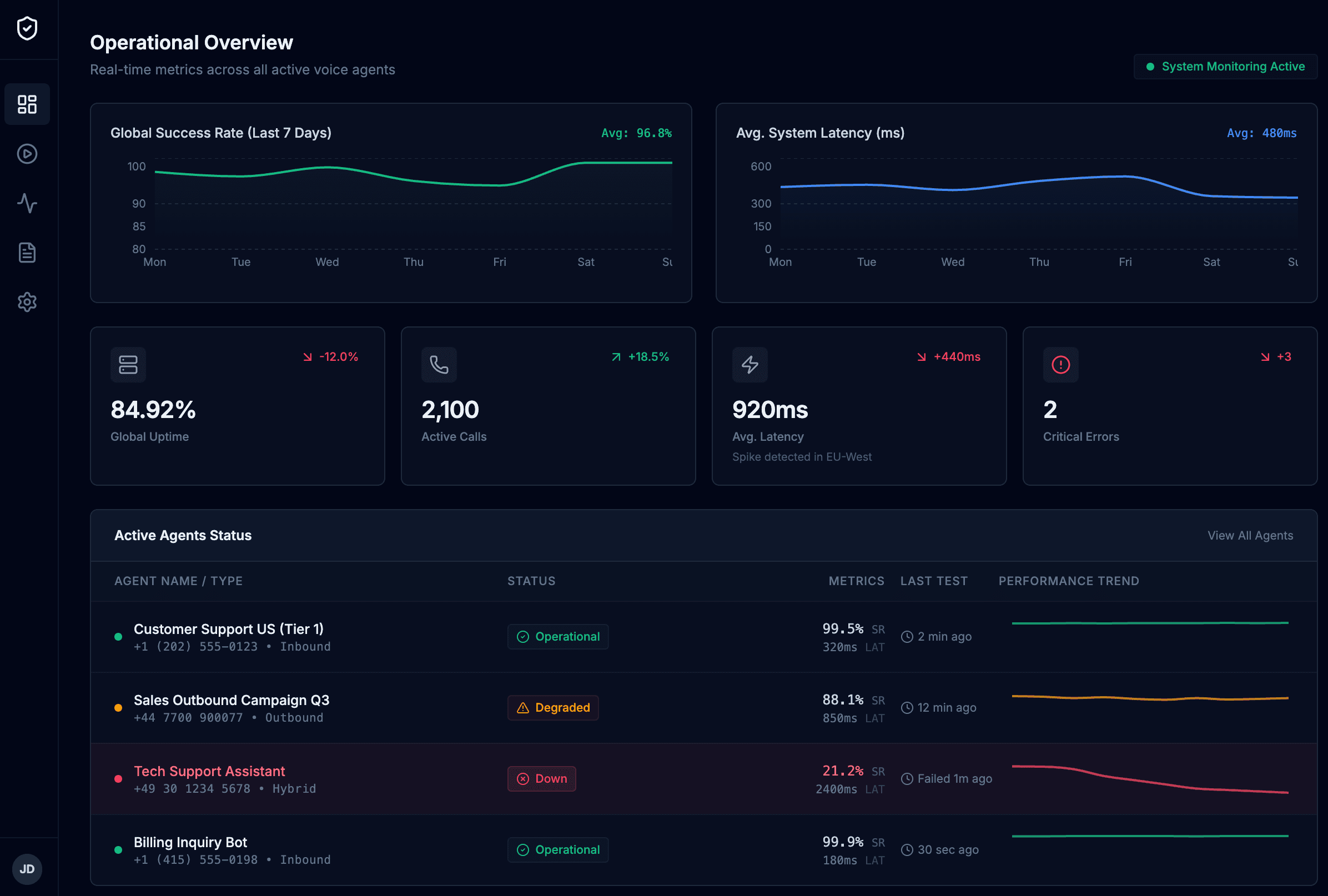

Continuous regression tests: Afterwards, regression tests keep running on a recurring schedule, so every relevant change to prompts or models can be tested automatically and made visible in your dashboard.

Internal platform for monitoring and on-demand tests

The platform makes testing and monitoring your voice agents simple and repeatable.

- Ad-hoc test runs
- Continuous regression tests
- Uptime monitoring & alerts
- One-click reports
- Multi-agent overview

Attacks we simulate for you

We combine known weaknesses from recent research papers, public jailbreak databases, and our own attack patterns from real projects.

Prompt injection & jailbreaks

We test systematic attempts to override system prompts, shift policy boundaries, and gradually erode security instructions – including multi-step conversation chains.

Data leakage & compliance violations

Can a user indirectly query internal information, sensitive customer data, or internal tools? We identify where your current answers already reveal more than they should.

Tool and workflow abuse

As soon as your voice agent can perform external actions (e.g. sending emails, creating tickets, preparing payments), we specifically test abuse scenarios and unwanted side effects.

Security you can measure, not just hope for

attack patterns tested (including known jailbreak and prompt-injection techniques)

average success rate of critical attacks during first runs in new setups

continuous regression tests possible – every change can be checked automatically

In-depth whitepaper on AI voice security

In our whitepaper we cover common weaknesses in voice agents, concrete attack paths, and best practices for guardrails, monitoring, and continuous testing.

Test your voice agents under real-world conditions

We combine hands-on automation experience with a deep understanding of modern LLM security. Talk to us about your use case – or start directly with an initial test run.